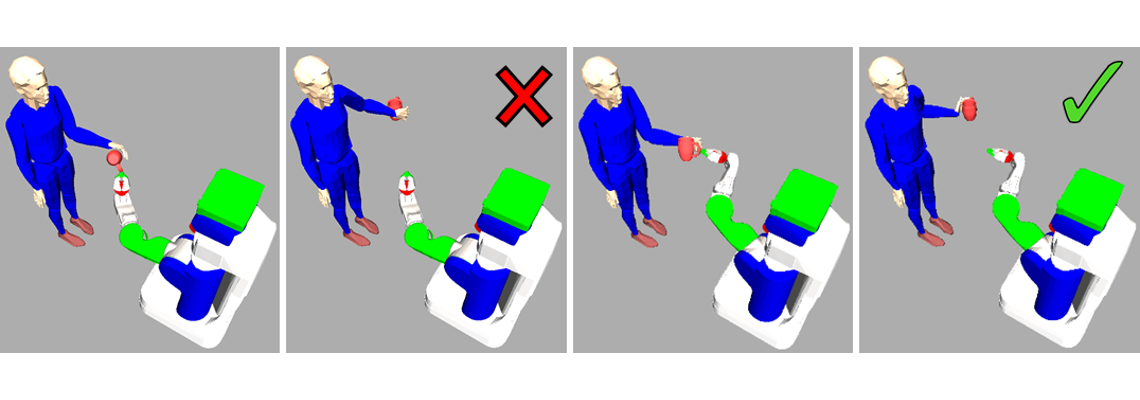

Above: Ergonomically poor (left) and improved (right) handoff maneuvers between a PR2 robot and a human.

Pairs of humans can perform collaborative object manipulation tasks with relative ease. People work together to move furniture, assemble airplanes in factories, erect floors, walls, and ceilings on construction sites, and perform many other collaborative tasks without much conscious mental effort.

This lack of conscious effort belies the multiple types of communication on which humans rely when completing such tasks. Two people moving furniture in a home coordinate their actions using explicit verbal signals, implicit visual communication, and interaction forces transmitted through the object itself. In addition, each person has an intuitive understanding of which actions are safe and natural for their partner and plans their own actions to avoid forcing their collaborator to contort in ways that are uncomfortable or dangerous.

Our goal in this project is to give collaborative robots (co-robots) this same intuition about human physical constraints and ergonomics, so that they can choose actions that serve not only their own goals but also the constraints and ergonomic costs of their human collaborators.

We represent this "intuition" as explicit mechanical (kinematic/dynamic) models of individual humans. These models compactly capture the kinematic constraints on a person's motion and can be combined with biomechanically derived ergonomic cost functions to model the naturalness and comfort of different human motions.
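To make the idea concrete, here is a minimal sketch, not the project's actual model: a hypothetical planar two-link human arm (upper arm and forearm) with made-up link lengths, a quadratic "ergonomic cost" that penalizes deviation from an assumed comfortable posture, and a helper that picks the candidate handoff location the arm can reach most comfortably. The constants `UPPER_ARM`, `FOREARM`, `NEUTRAL`, and `WEIGHTS`, the function names, and the quadratic cost are all illustrative assumptions standing in for real biomechanically derived cost functions.

```python
import math
from typing import Iterable, Optional, Tuple

# Hypothetical planar arm: shoulder at the origin, link lengths in meters.
UPPER_ARM = 0.30
FOREARM = 0.35

# Hypothetical "comfortable" posture (radians) and per-joint cost weights.
NEUTRAL = (math.radians(-30.0), math.radians(60.0))
WEIGHTS = (1.0, 0.5)

Point = Tuple[float, float]
Joints = Tuple[float, float]


def forward_kinematics(q: Joints, l1: float = UPPER_ARM, l2: float = FOREARM) -> Point:
    """Hand position for shoulder angle q[0] and elbow angle q[1]."""
    q1, q2 = q
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


def inverse_kinematics(p: Point, l1: float = UPPER_ARM, l2: float = FOREARM) -> Optional[Joints]:
    """Closed-form IK with elbow angle >= 0; None if p is outside the workspace."""
    x, y = p
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None  # kinematic constraint: the arm cannot reach p
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def ergonomic_cost(q: Joints) -> float:
    """Weighted squared deviation from the assumed neutral posture (a stand-in cost)."""
    return sum(w * (qi - qn) ** 2 for w, qi, qn in zip(WEIGHTS, q, NEUTRAL))


def best_handoff(candidates: Iterable[Point]) -> Optional[Point]:
    """Return the reachable candidate with the lowest ergonomic cost, or None."""
    best, best_cost = None, math.inf
    for p in candidates:
        q = inverse_kinematics(p)
        if q is None:
            continue  # unreachable points are ruled out before scoring
        cost = ergonomic_cost(q)
        if cost < best_cost:
            best, best_cost = p, cost
    return best


print(best_handoff([(0.5, -0.2), (0.3, 0.4), (0.9, 0.0)]))  # (0.5, -0.2)
```

Checking reachability before scoring mirrors the two roles of the model in the paragraph above: kinematic constraints rule candidates out entirely, and the ergonomic cost ranks the ones that remain.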

Our work with human models focuses on two questions: (a) how to use such models to optimize robot behavior in human-robot collaborative tasks, and (b) how to fit mechanical models to individual human collaborators in a way that captures each person's limitations on motion due to age, disease, or disability.
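Fitting a model to an individual can be illustrated in the simplest possible setting. The sketch below assumes, hypothetically, that a motion-capture system already provides shoulder and elbow angles plus wrist positions for a planar two-link arm. It recovers that person's link lengths by linear least squares and records the joint ranges they actually used as a crude stand-in for individual motion limits. The names `fit_link_lengths` and `observed_joint_ranges` are illustrative, and this is a toy simplification rather than the project's published fitting procedure.

```python
import math
from typing import Sequence, Tuple

# One observation: measured shoulder and elbow angles (radians) and the
# measured wrist position (meters) in the shoulder frame.
Sample = Tuple[float, float, float, float]


def fit_link_lengths(samples: Sequence[Sample]) -> Tuple[float, float]:
    """Least-squares upper-arm and forearm lengths for a planar two-link arm.

    Forward kinematics is linear in the lengths (l1, l2):
        x = l1*cos(q1) + l2*cos(q1 + q2)
        y = l1*sin(q1) + l2*sin(q1 + q2)
    so we accumulate the 2x2 normal equations and solve them directly.
    """
    s11 = s12 = s22 = r1 = r2 = 0.0
    for q1, q2, x, y in samples:
        rows = ((math.cos(q1), math.cos(q1 + q2), x),
                (math.sin(q1), math.sin(q1 + q2), y))
        for a1, a2, b in rows:
            s11 += a1 * a1
            s12 += a1 * a2
            s22 += a2 * a2
            r1 += a1 * b
            r2 += a2 * b
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        # e.g. a straight elbow in every sample makes the two links indistinguishable
        raise ValueError("degenerate data: vary the elbow angle across samples")
    # Cramer's rule on [[s11, s12], [s12, s22]] @ [l1, l2] = [r1, r2]
    return (s22 * r1 - s12 * r2) / det, (s11 * r2 - s12 * r1) / det


def observed_joint_ranges(
        samples: Sequence[Sample]) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """Per-joint (min, max) angles actually reached: a crude personal limit."""
    shoulder = [s[0] for s in samples]
    elbow = [s[1] for s in samples]
    return (min(shoulder), max(shoulder)), (min(elbow), max(elbow))
```

A real arm has more joints and the measured angles are noisy, so a practical fit would be nonlinear and would need far more data; the linear structure exploited here only holds for this toy model.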

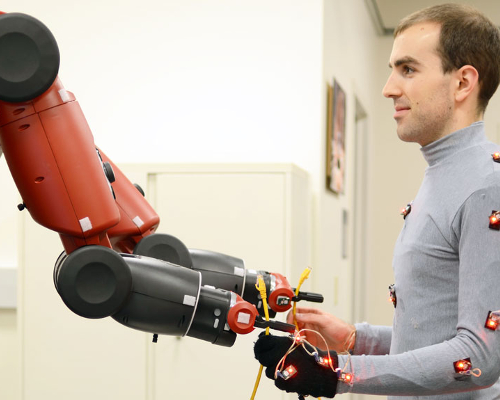

This work is carried out in collaboration with the InterACT Lab. Above: Data collection during a human-robot handoff task.

Ninghang Hu, Aaron Bestick, Gwenn Englebienne, Ruzena Bajcsy, and Ben Kröse. Human intent forecasting using intrinsic kinematic constraints. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 787–793. IEEE, 2016.

Aaron Bestick, Ruzena Bajcsy, and Anca D Dragan. Implicitly assisting humans to choose good grasps in robot to human handovers. In International Symposium on Experimental Robotics (ISER), 2016.

Aaron M Bestick, Samuel A Burden, Giorgia Willits, Nikhil Naikal, S Shankar Sastry, and Ruzena Bajcsy. Personalized kinematics for human-robot collaborative manipulation. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 1037–1044. IEEE, 2015.

Aaron Bestick, Lillian J Ratliff, Posu Yan, Ruzena Bajcsy, and S Shankar Sastry. An inverse correlated equilibrium framework for utility learning in multiplayer, noncooperative settings. In Proceedings of the 2nd International Conference on High Confidence Networked Systems, pages 9–16. ACM, 2013.